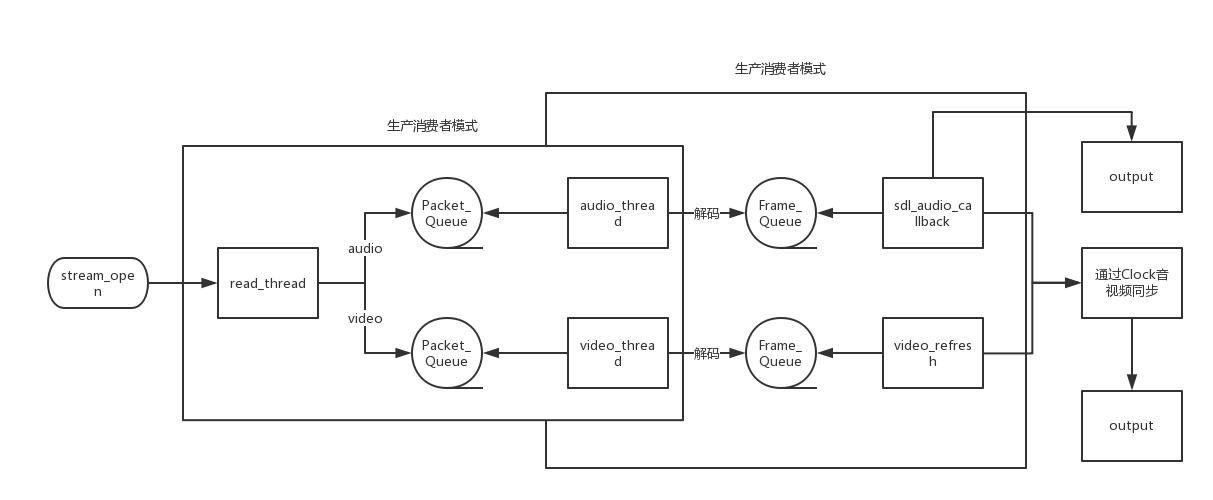

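Audio and video are decoded and played on separate threads, so a synchronization step is needed to keep their timelines consistent.

Audio-video synchronization strategies

Audio as the master clock (video syncs to audio)

Video as the master clock (audio syncs to video)

External clock (system time) as the master clock (both video and audio sync to the external clock)

Audio and video each as their own master clock (each is output independently, with no synchronization)

ffplay implements the first three strategies above and defaults to audio as the master clock.

The strategy is selected with the sync option: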

```c
{ "sync", HAS_ARG | OPT_EXPERT, { .func_arg = opt_sync }, "set audio-video sync. type (type=audio/video/ext)", "type" },
```
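How the option string maps to a master-clock type can be sketched as follows. The enum names follow ffplay's AV_SYNC_* constants; parse_sync_type is a hypothetical helper written for illustration and is not ffplay's actual opt_sync.

```c
#include <string.h>

/* Master-clock choices, named after ffplay's AV_SYNC_* constants. */
enum {
    AV_SYNC_AUDIO_MASTER,   /* default: video follows audio */
    AV_SYNC_VIDEO_MASTER,   /* audio follows video */
    AV_SYNC_EXTERNAL_CLOCK  /* both follow the system clock */
};

/* Hypothetical helper: map the option string to a sync type.
 * Returns -1 for an unknown type so the caller can report the error. */
static int parse_sync_type(const char *arg)
{
    if (strcmp(arg, "audio") == 0)
        return AV_SYNC_AUDIO_MASTER;
    if (strcmp(arg, "video") == 0)
        return AV_SYNC_VIDEO_MASTER;
    if (strcmp(arg, "ext") == 0)
        return AV_SYNC_EXTERNAL_CLOCK;
    return -1;
}
```

On the command line this corresponds to something like ffplay -sync video input.mp4.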

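There are two main causes of audio-video desynchronization. One is the difference in decoding time that arises because audio and video carry different amounts of data and use different encoding algorithms.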

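Clock

```c
typedef struct Clock {
    double pts;           /* clock base, in seconds */
    double pts_drift;     /* clock base minus the time at which the clock was updated */
    double last_updated;  /* system time of the last update */
    double speed;         /* playback speed */
    int serial;           /* clock is based on a packet with this serial */
    int paused;
    int *queue_serial;    /* pointer to the current packet queue serial, used to detect an obsolete clock */
} Clock;
```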
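The role of pts_drift is easier to see in code. Below is a minimal sketch of how such a clock can be set and read, modeled on ffplay's set_clock_at and get_clock. The Demo* names are hypothetical, the serial fields are left out, and the current time is passed in explicitly (ffplay reads it from av_gettime_relative()) so the sketch stays self-contained.

```c
/* Reduced version of the Clock struct; serial and queue_serial are omitted. */
typedef struct DemoClock {
    double pts;          /* clock value at the last update, in seconds */
    double pts_drift;    /* pts minus the system time of the last update */
    double last_updated; /* system time of the last update, in seconds */
    double speed;        /* playback speed, 1.0 = normal */
    int paused;
} DemoClock;

/* Record that the clock read `pts` at system time `now`. */
static void demo_set_clock_at(DemoClock *c, double pts, double now)
{
    c->pts = pts;
    c->last_updated = now;
    c->pts_drift = pts - now;
}

/* Read the clock at system time `now`.
 * At speed 1.0 this is simply pts_drift + now, i.e. the stored pts
 * plus the wall-clock time elapsed since the update. */
static double demo_get_clock(const DemoClock *c, double now)
{
    if (c->paused)
        return c->pts;
    return c->pts_drift + now - (now - c->last_updated) * (1.0 - c->speed);
}
```

Storing the drift instead of re-deriving it means the clock keeps advancing between updates without anyone having to touch it.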

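pts

pts is short for presentation timestamp. It marks the moment at which a frame should be presented. In Clock it is a double, that is, a pts value already converted to seconds. The unit of a raw pts is determined by the timebase, whose type is the struct AVRational (used to represent a fraction):

```c
typedef struct AVRational {
    int num; // numerator
    int den; // denominator
} AVRational;
```

For example, timebase = {1, 1000} means one thousandth of a second, so pts = 1000 is 1 second and that frame must be presented in ffplay at the 1-second mark. The usual way to convert a pts to seconds is pts * av_q2d(timebase).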
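The conversion can be tried without linking FFmpeg. The sketch below uses a local stand-in for AVRational and av_q2d; DemoRational, demo_q2d and demo_pts_to_seconds are hypothetical names used only for this example.

```c
/* Local stand-in for FFmpeg's AVRational so the example compiles alone. */
typedef struct DemoRational {
    int num;
    int den;
} DemoRational;

/* Same idea as av_q2d(): turn the fraction into a double. */
static double demo_q2d(DemoRational r)
{
    return r.num / (double)r.den;
}

/* Convert a raw pts, expressed in timebase units, to seconds. */
static double demo_pts_to_seconds(long long pts, DemoRational tb)
{
    return pts * demo_q2d(tb);
}
```

With a timebase of {1, 1000}, a pts of 1000 comes out as 1 second; with the {1, 90000} timebase common in MPEG-TS, a pts of 180000 comes out as 2 seconds.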

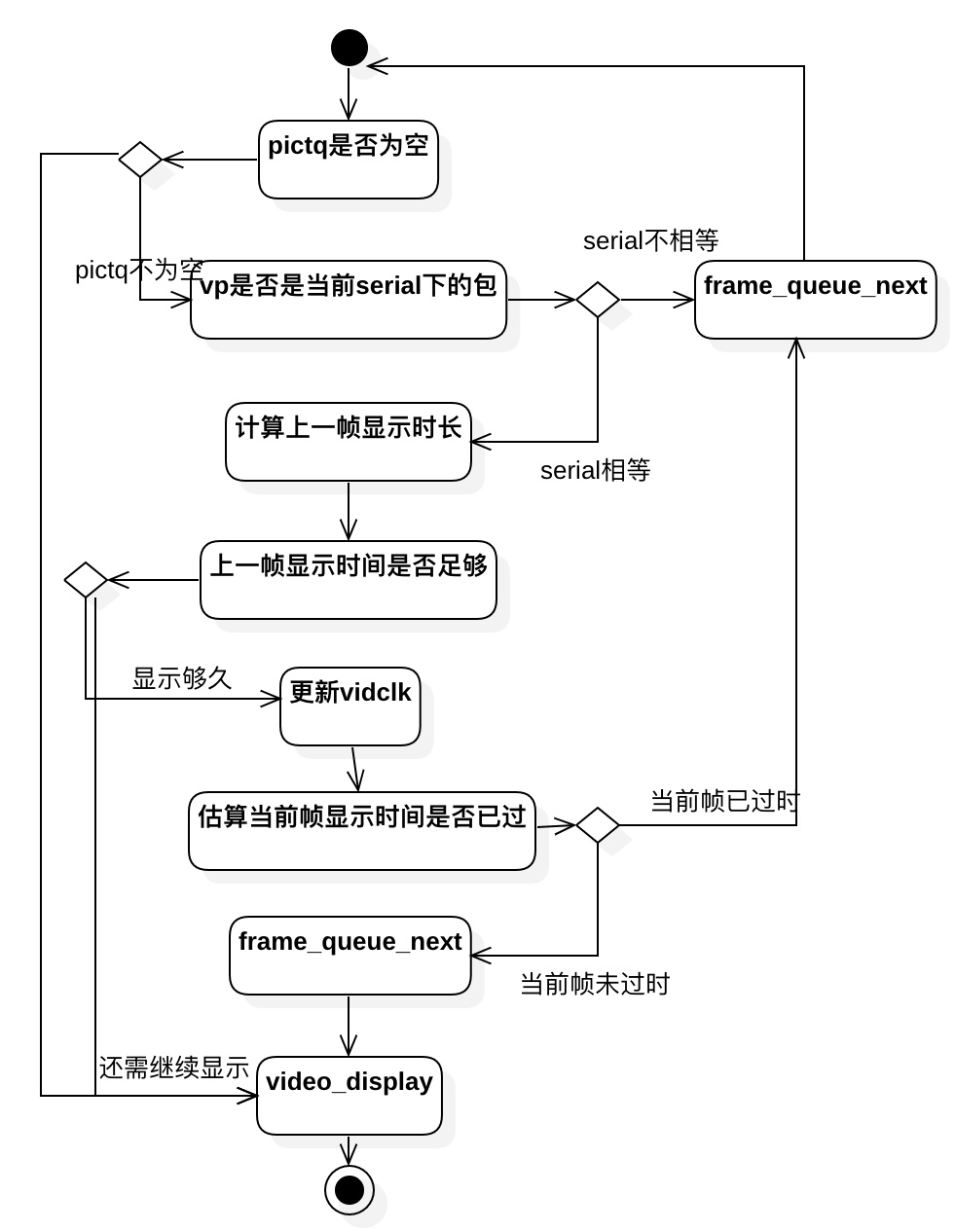

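Flow

If video runs ahead, the previous frame is shown again while waiting for audio; if video falls behind, frames are dropped to catch up with audio.

The clocks are initialized in stream_open: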

```c
static VideoState *stream_open(FFPlayer *ffp, const char *filename, AVInputFormat *iformat)
{
    // ...
    init_clock(&is->vidclk, &is->videoq.serial);
    init_clock(&is->audclk, &is->audioq.serial);
    init_clock(&is->extclk, &is->extclk.serial);
    // ...
}
```

Video data

```c
static int ffplay_video_thread(void *arg)
{
    // ...
    AVFrame *frame = av_frame_alloc();
    double pts;
    // ...
    for (;;) {
        // fetch one decoded video frame
        ret = get_video_frame(ffp, frame);
        // ...
        // frame pts in seconds, or NAN when the frame carries no pts
        pts = (frame->pts == AV_NOPTS_VALUE) ? NAN : frame->pts * av_q2d(tb);
        pts = pts * 1000;
        // hand the frame and its pts to the picture queue
        ret = queue_picture(ffp, frame, pts, duration, frame->pkt_pos, is->viddec.pkt_serial);
        // ...
    }
    // ...
}
```

After get_video_frame returns a decoded frame, the thread computes pts, the time at which that frame should be shown. It is computed as frame->pts * av_q2d(tb), where tb is an AVRational holding the stream's timebase.

```c
static int queue_picture(FFPlayer *ffp, AVFrame *src_frame, double pts, double duration, int64_t pos, int serial)
{
    // ...
    Frame *vp;
    // ...
    if (!(vp = frame_queue_peek_writable(&is->pictq)))
        return -1;
    // ...
    frame_queue_push(&is->pictq);
    // ...
}
```

Every time an AVFrame is written, a Frame slot must first be obtained from the frame queue.
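The peek-writable / push pairing is a fixed-size ring buffer. A minimal single-threaded sketch of that idea follows. All Demo* names are hypothetical. The real frame_queue_peek_writable waits on a condition variable until a slot frees up, whereas this sketch returns NULL when the queue is full.

```c
#include <stddef.h>

#define DEMO_QUEUE_SIZE 3

typedef struct DemoFrame {
    double pts; /* presentation time in seconds */
} DemoFrame;

typedef struct DemoFrameQueue {
    DemoFrame queue[DEMO_QUEUE_SIZE];
    int rindex; /* next slot to read */
    int windex; /* next slot to write */
    int size;   /* number of filled slots */
} DemoFrameQueue;

/* Return the slot the producer may fill, or NULL when the queue is full. */
static DemoFrame *demo_peek_writable(DemoFrameQueue *f)
{
    if (f->size >= DEMO_QUEUE_SIZE)
        return NULL;
    return &f->queue[f->windex];
}

/* Publish the slot obtained from demo_peek_writable(). */
static void demo_push(DemoFrameQueue *f)
{
    f->windex = (f->windex + 1) % DEMO_QUEUE_SIZE;
    f->size++;
}

/* Return the oldest filled slot, or NULL when the queue is empty. */
static DemoFrame *demo_peek_readable(DemoFrameQueue *f)
{
    if (f->size <= 0)
        return NULL;
    return &f->queue[f->rindex];
}

/* Release the slot obtained from demo_peek_readable(). */
static void demo_next(DemoFrameQueue *f)
{
    f->rindex = (f->rindex + 1) % DEMO_QUEUE_SIZE;
    f->size--;
}
```

Splitting the write into peek and push lets the decoder fill the slot in place and only then make it visible to the reader.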

Audio data

```c
static int audio_thread(void *arg)
{
    // ...
    AVFrame *frame = av_frame_alloc();
    Frame *af;
    // ...
    do {
        // decode one audio frame
        if ((got_frame = decoder_decode_frame(ffp, &is->auddec, frame, NULL)) < 0)
            goto the_end;

        if (got_frame) {
            // ...
            // get a writable slot from the sample queue
            if (!(af = frame_queue_peek_writable(&is->sampq)))
                goto the_end;
            // ...
            // move the decoded data into the slot and publish it
            av_frame_move_ref(af->frame, frame);
            frame_queue_push(&is->sampq);
        }
    } while (ret >= 0 || ret == AVERROR(EAGAIN) || ret == AVERROR_EOF);
    // ...
}
```

The process is much the same as for video decoding: the decoded AVFrame is moved into a Frame.

```c
static int audio_decode_frame(FFPlayer *ffp)
{
    // ...
    if (!(af = frame_queue_peek_readable(&is->sampq)))
        return -1;
    // ...
    if (!isnan(af->pts))
        is->audio_clock = af->pts + (double) af->frame->nb_samples / af->frame->sample_rate;
    else
        is->audio_clock = NAN;
    // ...
}
```

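In the audio playback path, every frame that is played yields a timestamp: the frame's pts plus its duration, which is the time at which the frame ends. It is stored in the audio_clock field of the VideoState struct, and the audio-video sync calculation works from that struct.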
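The audio_clock formula is plain arithmetic, shown here as a small sketch. demo_audio_clock is a hypothetical name and the sample numbers in the note below are made up for illustration.

```c
#include <math.h>

/* End time of an audio frame: its start pts plus its duration,
 * where duration = number of samples / sample rate.
 * Mirrors the isnan() guard used in audio_decode_frame. */
static double demo_audio_clock(double frame_pts, int nb_samples, int sample_rate)
{
    if (isnan(frame_pts))
        return NAN;
    return frame_pts + (double)nb_samples / sample_rate;
}
```

A frame that starts at 2.0 s and holds 960 samples at 48000 Hz lasts 0.02 s, so the audio clock after that frame is 2.02 s.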

```c
static void sdl_audio_callback(void *opaque, Uint8 *stream, int len)
{
    // ...
    audio_size = audio_decode_frame(ffp);
    // ...
    if (!isnan(is->audio_clock)) {
        set_clock_at(&is->audclk,
                     is->audio_clock - (double)(is->audio_write_buf_size) / is->audio_tgt.bytes_per_sec - SDL_AoutGetLatencySeconds(ffp->aout),
                     is->audio_clock_serial,
                     ffp->audio_callback_time / 1000000.0);
        sync_clock_to_slave(&is->extclk, &is->audclk);
    }
}
```

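The audio_clock value is then adjusted and stored in the Clock struct. The second argument of set_clock_at is the playback position, in seconds, of the audio actually being heard: audio_clock minus the duration of the data still waiting in the write buffer, minus the output latency.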
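A worked version of that second argument, as a sketch: demo_audible_pts is a hypothetical name, and the latency is passed in as a plain number in place of the SDL_AoutGetLatencySeconds call.

```c
/* Position of the audio currently being heard, in seconds.
 * audio_clock     : end time of the last decoded frame
 * write_buf_size  : bytes decoded but not yet handed to the device
 * bytes_per_sec   : output byte rate (sample rate * channels * bytes per sample)
 * latency_seconds : delay inside the audio output itself */
static double demo_audible_pts(double audio_clock, int write_buf_size,
                               int bytes_per_sec, double latency_seconds)
{
    return audio_clock
         - (double)write_buf_size / bytes_per_sec
         - latency_seconds;
}
```

For 48 kHz stereo 16-bit output the byte rate is 192000 bytes per second, so 19200 buffered bytes are 0.1 s of audio. With audio_clock at 2.02 s and 0.02 s of device latency, the position being heard is 1.9 s.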

Synchronization

```c
static int video_refresh_thread(void *arg)
{
    FFPlayer *ffp = arg;
    VideoState *is = ffp->is;
    double remaining_time = 0.0;
    while (!is->abort_request) {
        if (remaining_time > 0.0)
            av_usleep((int)(int64_t)(remaining_time * 1000000.0));
        remaining_time = REFRESH_RATE;
        if (is->show_mode != SHOW_MODE_NONE && (!is->paused || is->force_refresh))
            video_refresh(ffp, &remaining_time);
    }

    return 0;
}

/* called to display each frame */
static void video_refresh(FFPlayer *opaque, double *remaining_time)
{
    FFPlayer *ffp = opaque;
    VideoState *is = ffp->is;
    double time;

    Frame *sp, *sp2;

    if (!is->paused && get_master_sync_type(is) == AV_SYNC_EXTERNAL_CLOCK && is->realtime)
        check_external_clock_speed(is);

    if (!ffp->display_disable && is->show_mode != SHOW_MODE_VIDEO && is->audio_st) {
        time = av_gettime_relative() / 1000000.0;
        if (is->force_refresh || is->last_vis_time + ffp->rdftspeed < time) {
            video_display2(ffp);
            is->last_vis_time = time;
        }
        *remaining_time = FFMIN(*remaining_time, is->last_vis_time + ffp->rdftspeed - time);
    }

    if (is->video_st) {
retry:
        if (frame_queue_nb_remaining(&is->pictq) == 0) {
            // nothing to do, no picture to display in the queue
        } else {
            double last_duration, duration, delay;
            Frame *vp, *lastvp;
            // lastvp: previous frame, vp: current frame, nextvp: next frame

            /* dequeue the picture */
            lastvp = frame_queue_peek_last(&is->pictq);
            vp = frame_queue_peek(&is->pictq);

            // frame skipping: discard frames whose serial is obsolete
            if (vp->serial != is->videoq.serial) {
                frame_queue_next(&is->pictq);
                goto retry;
            }

            if (lastvp->serial != vp->serial)
                is->frame_timer = av_gettime_relative() / 1000000.0;

            if (is->paused)
                goto display;

            /* compute nominal last_duration */
            // duration of the previous frame
            last_duration = vp_duration(is, lastvp, vp);
            // how long to delay before showing the current frame
            delay = compute_target_delay(ffp, last_duration, is);
            // current system time
            time = av_gettime_relative() / 1000000.0;
            if (isnan(is->frame_timer) || time < is->frame_timer)
                is->frame_timer = time;
            if (time < is->frame_timer + delay) {
                // the previous frame has not been shown long enough: keep showing it
                *remaining_time = FFMIN(is->frame_timer + delay - time, *remaining_time);
                goto display;
            }

            // frame_timer becomes the end of the previous frame, which is also the start of the current one
            is->frame_timer += delay;
            // if it has drifted too far from the system time, reset it to the system time
            if (delay > 0 && time - is->frame_timer > AV_SYNC_THRESHOLD_MAX)
                is->frame_timer = time;

            SDL_LockMutex(is->pictq.mutex);
            if (!isnan(vp->pts))
                // update the video Clock for the next sync calculation
                update_video_pts(is, vp->pts, vp->pos, vp->serial);
            SDL_UnlockMutex(is->pictq.mutex);

            // frame-drop logic
            if (frame_queue_nb_remaining(&is->pictq) > 1) {
                Frame *nextvp = frame_queue_peek_next(&is->pictq);
                // display duration of the current frame
                duration = vp_duration(is, vp, nextvp);
                if (!is->step && (ffp->framedrop > 0 || (ffp->framedrop && get_master_sync_type(is) != AV_SYNC_VIDEO_MASTER)) && time > is->frame_timer + duration) {
                    // the system time is already past the end of the current frame: drop it
                    frame_queue_next(&is->pictq);
                    // go back to retry and try the next frame
                    goto retry;
                }
            }

            // ...
            frame_queue_next(&is->pictq);
            is->force_refresh = 1;

            SDL_LockMutex(ffp->is->play_mutex);
            if (is->step) {
                is->step = 0;
                if (!is->paused)
                    stream_update_pause_l(ffp);
            }
            SDL_UnlockMutex(ffp->is->play_mutex);
        }
display:
        /* display picture */
        if (!ffp->display_disable && is->force_refresh && is->show_mode == SHOW_MODE_VIDEO && is->pictq.rindex_shown)
            // render the video frame
            video_display2(ffp);
    }
    // ...
}
```

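Finally we reach video rendering. The rendering thread is video_refresh_thread, and remaining_time is how long that thread should sleep before the next refresh, that is, the sync wait. It is held in seconds and multiplied by 1000000.0 to get the microseconds passed to av_usleep.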

```c
static void video_display2(FFPlayer *ffp)
{
    VideoState *is = ffp->is;
    if (is->video_st)
        video_image_display2(ffp);
}

static void video_image_display2(FFPlayer *ffp)
{
    VideoState *is = ffp->is;
    Frame *vp;
    Frame *sp = NULL;

    vp = frame_queue_peek_last(&is->pictq);

    if (vp->bmp) {
        // ...
        if (ffp->render_wait_start && !ffp->start_on_prepared && is->pause_req) {
            if (!ffp->first_video_frame_rendered) {
                ffp->first_video_frame_rendered = 1;
                ffp_notify_msg1(ffp, FFP_MSG_VIDEO_RENDERING_START);
            }
            while (is->pause_req && !is->abort_request) {
                SDL_Delay(20);
            }
        }
        SDL_VoutDisplayYUVOverlay(ffp->vout, vp->bmp);
        // ...
    }
}
```

References